Compressiblity of Secure Scuttlebutt RPC messages

A while ago I started investigating problems related to high data usage in Secure Scuttlebutt clients. As a result I ran several benchmarks to investigate whether Secure Scuttlebutt RPC messages are compressible. This article sums up my findings as I believe they may be useful for futher analysis of this problem.

The code for the benchmark is available here if you want to browse it while reading the article.

Compression algorithms

My goal was to select multiple compression algorithms in order to compare their performance on Secure Scuttlebutt RPC data. I selected the following algorithms for this benchmark due to the fact that they were modern and already widely used in the industry by companies dealing with large volumes data:

I also included the following algorithms in the benchmark for comparison purposes although it was my belief that their relatively slower performance may be problematic for example in the context of running SSB clients on mobile devices:

Data sets

For this benchmark I recorded RPC messages being sent and received during actual sessions of using SSB clients. The idea was to realistically reflect network traffic which is experienced during normal usage instead of creating a synthetic benchmark.

I captured RPC messages exchanged during two phases of using SSB clients. The first set of messages was captured while the initial heavy replication during which the client downloads a large volume of feed messages was occuring. The second set of messages was captured after the initial period of heavy replication. I refer to those two sets as "many feed messages" and "few feed messages" respectively.

To review the captured data I analysed and then categorized the messages exchanged in those sessions in the following categories:

- "Feed messages" - messages which are part of someone's feed being exchanged due to

createHistoryStreamrequests or EBT sessions - "

createHistoryStreamrequests" - self explanatory - "EBT notes" - messages containing EBT session notes

- Other - all other messages

A random sample of messages was selected for the benchmark to speed up its execution. Below you can see the analysis of each data set.

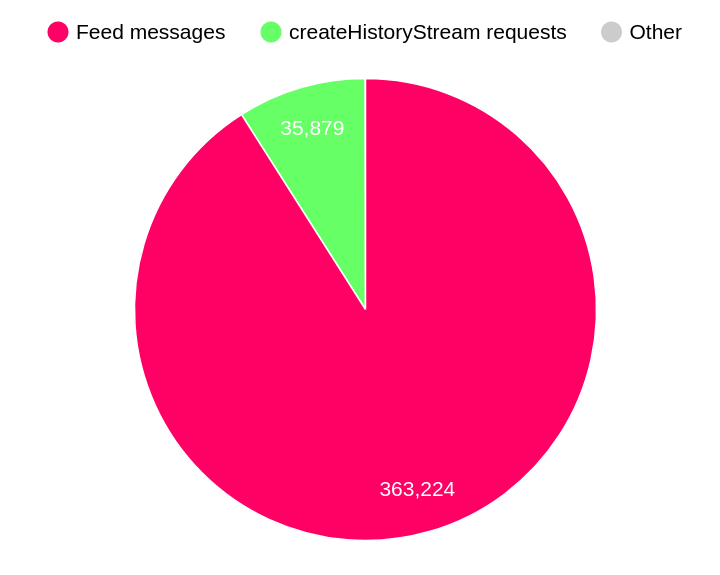

"Many feed messages" set

The number of messages in this set by category:

- Feed messages: 363224

createHistoryStreamrequests: 35879- Other: 196

Out of those messages a random sample of 9980 messages was selected.

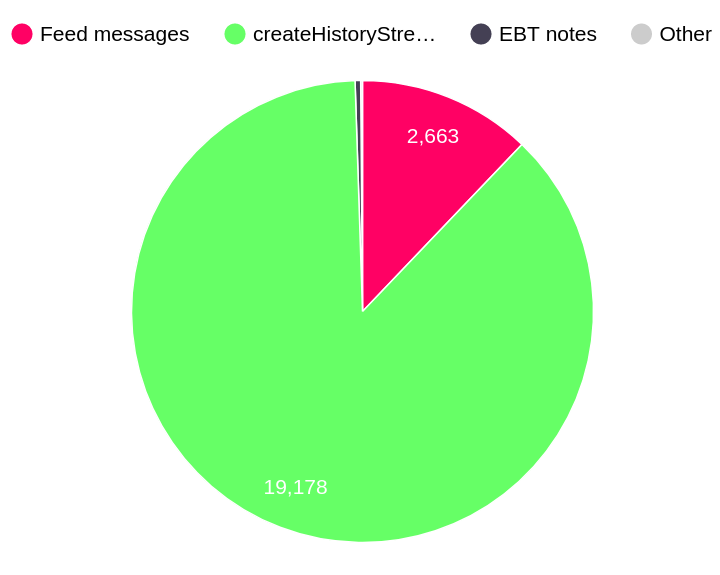

"Few feed messages" set

The number of messages in this set by category:

- Feed messages: 2663

createHistoryStreamrequests 19178- EBT notes: 85

- Other: 28

Out of those messages a random sample of 10977 messages was selected.

Batching messages

I ran three benchmarks. The first benchmark compressed messages individually while the two other ones compressed them in batches of 10 and 100 messages. I decided to choose this way of performing the benchmarks as the RPC messages are usually sent in large batches which is the perfect opportunity for optimizing compression.

Results

The x axis of the graphs below shows the tested database systems. They y axis is the data compression ratio. Larger compress ratio is better. If the result is below 1 then the compressed data is larger than uncompressed data.

"Many feed messages" set

.png)

Brotli (best) = 1.38 ratio

Brotli (default) = 1.36 ratio

Deflate (best) = 1.28 ratio

Deflate (default) = 1.23 ratio

LZMA2 = 1.20 ratio

ZSTD (best) = 1.19 ratio

ZSTD (default) = 1.19 ratio

Deflate (fastest) = 1.12 ratio

ZSTD (fastest) = 1.12 ratio

LZMA = 1.11 ratio

Brotli (fastest) = 1.07 ratio

Snappy = 1.02 ratio

S2 (best) = 1.00 ratio

S2 (default) = 0.97 ratio

S2 (better) = 0.96 ratio

.png)

Brotli (best) = 1.91 ratio

Brotli (default) = 1.84 ratio

Deflate (best) = 1.79 ratio

Deflate (default) = 1.77 ratio

ZSTD (best) = 1.77 ratio

ZSTD (default) = 1.75 ratio

LZMA2 = 1.75 ratio

LZMA = 1.73 ratio

ZSTD (fastest) = 1.73 ratio

Deflate (fastest) = 1.72 ratio

Brotli (fastest) = 1.61 ratio

S2 (best) = 1.43 ratio

S2 (default) = 1.40 ratio

Snappy = 1.39 ratio

S2 (better) = 1.37 ratio

.png)

Brotli (best) = 2.48 ratio

Brotli (default) = 2.41 ratio

ZSTD (best) = 2.38 ratio

Deflate (best) = 2.37 ratio

ZSTD (default) = 2.34 ratio

ZSTD (fastest) = 2.32 ratio

LZMA2 = 2.31 ratio

LZMA = 2.31 ratio

Deflate (default) = 2.31 ratio

Deflate (fastest) = 2.22 ratio

Brotli (fastest) = 2.12 ratio

S2 (best) = 1.82 ratio

S2 (better) = 1.77 ratio

Snappy = 1.77 ratio

S2 (default) = 1.75 ratio

"Few feed messages" set

.png)

Brotli (default) = 1.28 ratio

Brotli (best) = 1.25 ratio

Deflate (best) = 1.21 ratio

LZMA2 = 1.11 ratio

ZSTD (best) = 1.10 ratio

Deflate (default) = 1.10 ratio

ZSTD (default) = 1.10 ratio

Deflate (fastest) = 1.09 ratio

Brotli (fastest) = 1.08 ratio

Snappy = 1.04 ratio

ZSTD (fastest) = 1.04 ratio

LZMA = 0.99 ratio

S2 (best) = 0.93 ratio

S2 (better) = 0.93 ratio

S2 (default) = 0.92 ratio

.png)

Brotli (best) = 2.01 ratio

Brotli (default) = 1.95 ratio

Deflate (best) = 1.90 ratio

Deflate (default) = 1.88 ratio

LZMA2 = 1.85 ratio

Deflate (fastest) = 1.85 ratio

ZSTD (best) = 1.84 ratio

LZMA = 1.82 ratio

ZSTD (default) = 1.82 ratio

ZSTD (fastest) = 1.80 ratio

Brotli (fastest) = 1.63 ratio

Snappy = 1.54 ratio

S2 (best) = 1.53 ratio

S2 (better) = 1.51 ratio

S2 (default) = 1.50 ratio

.png)

Brotli (best) = 2.51 ratio

Brotli (default) = 2.43 ratio

Deflate (best) = 2.38 ratio

ZSTD (best) = 2.37 ratio

ZSTD (default) = 2.33 ratio

Deflate (default) = 2.32 ratio

LZMA2 = 2.32 ratio

LZMA = 2.32 ratio

ZSTD (fastest) = 2.30 ratio

Deflate (fastest) = 2.26 ratio

Brotli (fastest) = 2.09 ratio

S2 (best) = 1.83 ratio

Snappy = 1.82 ratio

S2 (better) = 1.81 ratio

S2 (default) = 1.77 ratio

Conclusions

As expected the compression ratios achieved through compressing multiple messages together are overall higher. This is not unexpected and batching messages for compression is usually a good idea.

Intrestingly using S2 has proven to lead to larger message sizes when compressing messages individually. When compressing messages in batches S2 performs similar or better to Snappy. The worst case scenario for this algorithm is however concerning.

Snappy was extremly fast (as expected - speed is what it was designed for) however underperformed and struggeled with individually compressing messages.

I didn't include performance results here as the benchmark was not really optimized however as expected DEFLATE and LZMA/LZMA2 were the slowest algorithms. Algorithms such as ZSTD and Brotli achieved better compression ratios and better compression performance.

ZSTD achieved good compression ratios and good performance. Performance was much slower at the highest compression level without achieving a much larger compression ratio therefore I think it would make no sense to run ZSTD on this data at a level higher than default.

Brotli achieved better top compression ratios than ZSTD at the cost of performance. Brotli features more compression levels however and when fine-tuned to achieve compression ratios similar to those of ZSTD's default level it sometimes seemed to compress faster or at least have similar performance.

Overall in my opinion the most interesting algorithms which could be investigated further for this application out of those selected are ZSTD and Brotli.